What is a Product Development Strategy

TLDR

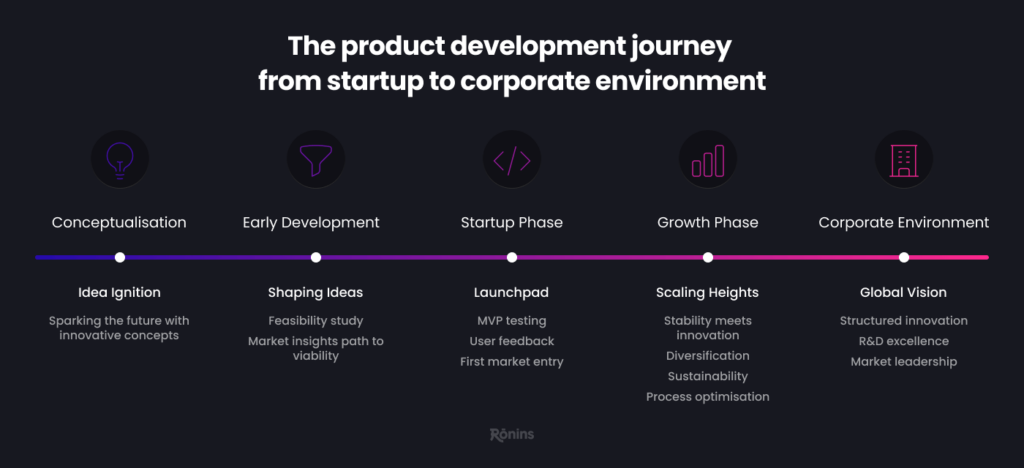

A product development strategy for web or software projects maps your idea from concept through to ongoing updates. It starts with user research, basic market checks, and a clear definition of the product’s main purpose. By setting milestones, dividing tasks, and clarifying roles, you keep your entire team aligned and focused.

Building a small MVP first helps you gather direct feedback and refine your direction. From there, iterative processes like Agile or Scrum, alongside continuous testing, streamline delivery. Once launched, real user data guides incremental changes, ensuring the product stays relevant, addresses user concerns, and adapts to new demands over time.

What Is a Product Development Strategy for Web or Software Projects?

Introduction

I’ve spent more than twenty years involved in web and software projects. Over that time, I’ve collected a library of mistakes, breakthroughs, and lessons learned. My teams and I often pushed out products in the early years with little planning. Some flopped, some scraped by, and a few found success. The successful ones had a clear roadmap, even if it wasn’t fancy, and they kept end users in mind.

Those experiences led me to understand that a successful product development strategy isn’t a luxury. It’s vital. It isn’t just a timeline or a list of tasks. It’s an iterative framework that clarifies why you’re building a product, how you’ll do it, who you’ll do it for, and what success looks like. In this article, I’ll detail each element of that framework, weaving in personal observations and a few cautionary tales. By the end, I hope you’ll have a detailed perspective on building, improving, and maintaining digital products that resonate with real users.

You’ll see me reference “web or software projects.” I use that phrase to cover anything from a small plugin or mobile app to a massive enterprise platform. The scale might differ, but the core principles remain the same. My philosophy is that the right strategy aligns your team, clarifies the product vision, and sets a clear path to a functional release. Let’s start by defining what we’re discussing, then delve into the steps shaping the journey.

• • •

Defining Product Development Strategy

What It Means to Me

A product development strategy is like a compass for a journey. It points in the direction you want to go and helps you chart a path from idea to launch (and beyond). In the context of web or software projects, it outlines:

- The problem you’re solving (e.g., streamlining project management, connecting users for social interaction, simplifying e-commerce transactions).

- The people you’re building it for (target audience, their needs, habits, and constraints).

- The technical and creative methods you’ll use (frameworks, coding languages, server setups, design styles).

- How you’ll know if you’re succeeding (marketing strategy, user feedback, metrics, revenue, retention).

Without a strategy, it’s easy to jump into coding or design only to realise halfway through that there’s no demand or that you’ve misjudged user needs. I’ve seen brilliant developers build impressive features that sat idle because they didn’t match real customer problems. A well-defined approach avoids that trap by forcing you to think everything through before investing months of development time.

Why It’s Not Just a Project Plan

A common misconception is that a product development strategy is the same as a project plan. A plan typically tells you the “when” and “who” of tasks. That’s important, but a strategy also addresses “why” and “how.” It connects each milestone to a user-driven goal or a marketplace requirement. Rather than a static document, it’s a living reference point that can evolve as you gather new data or adapt to changing conditions.

Early in my career, my teams often used Gantt charts and timelines as our primary planning tools because that was all there was. While those are handy for scheduling, they rarely address the broader reasoning behind each phase. We had no blueprint for which features mattered most to users or whether we’d tested any assumptions. That gap became painfully clear when the final product launched, and user response was lukewarm. Once we started including user research, a deeper exploration of market gaps, and success metrics in our documentation, our outcomes improved noticeably.

The Value of Adaptability

No matter how well you plan, the digital environment shifts quickly. Competitors appear with new features, user expectations evolve, or unforeseen technical issues arise. A good strategy accounts for unpredictability by staying flexible. That doesn’t mean you pivot at the first sign of trouble. It means you allow incremental updates to your approach based on user feedback, analytics, or changing priorities.

I am currently working with an Airbnb-style platform. When we started, the single-minded proposition was all about bookings, and we launched with particular features and analytics in mind. We quickly discovered that, because of the seasonal nature of the audience, the revenue would not come from bookings alone, so we moved into features that drove subscriptions. Instead of doubling down on bookings, we adapted our roadmap to strengthen the feature set people were actually using and gave customers what they wanted. That decision, and the change in business strategy that followed, came directly from a willingness to pivot when user patterns didn’t match our initial assumptions.

• • •

Early Planning: Concepts, Research, and Validation

Turning Ideas into Defined Product Concepts

The earliest step in your strategy is brainstorming or idea generation. You might have a simple suggestion from a colleague or an in-depth pitch from a product manager. Either way, you need to refine it into a coherent concept. That means asking yourself (and your team) key questions:

- Who will use this?

- What exact need does it serve?

- Why does this matter right now?

At this stage, I like to keep the conversation open. I gather input from every function: design, engineering, support, and leadership. Each person might bring unique insights or concerns. Perhaps the developer sees a serious technical hurdle (the developers always find something). Maybe the designer foresees a user experience challenge. Possibly the support lead knows that customers often request a particular feature. You combine these angles into a single statement of purpose for the product.

Practical Market Exploration

Once you’ve framed your concept, it’s time to see if it resonates in the real world. This is called market research. I typically start by looking at the existing market and what competitors or adjacent solutions are out there. For instance, if I build an online collaboration tool, I’ll explore existing products on major and minor platforms. I’ll note what they do well, where they falter, and whether their users still have unmet needs.

To gather market insights, you can read blog posts or community discussions (like on Reddit or product-focused forums) to see what users complain about or praise. You can also use modern artificial intelligence tools from providers like Perplexity, OpenAI, and Anthropic to help with this research. Some people conduct formal surveys to gather customer feedback. Others prefer focus groups or one-on-one interviews with potential customers. I’ve had success with both approaches. A 15-minute chat with a key user sometimes reveals more actionable information than a broad, generic survey. The goal is to confirm that your concept has a genuine place in the market and that it isn’t just a solution looking for a problem.

Understanding the Gap

Try to pinpoint the gap you intend to fill. Are you offering a simpler interface? A more cost-effective solution? Deeper integrations with popular services? Unique visual flair? (Remember to keep your claims grounded—if you say you’re doing something nobody else does, verify that thoroughly.)

Suppose you discover a competitor dominating the market, known for slow customer support. That’s a potential gap. Or you find out that most competitors lack a mobile-friendly experience. That’s another. You don’t always have to create something brand-new. Often, you need to address an overlooked but significant user issue.

Validating Assumptions with Rapid Testing

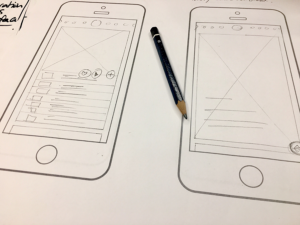

Before plunging into months of development, quickly validate your main assumptions. This might mean building a prototype or designing a clickable demo. It could also involve conceptual wireframes you show to a small group of prospective users. If you’re coding, keep it minimal. The key here is to confirm (or refute) your concept’s viability with minimal resources.

• • •

Building a Concrete Roadmap

Milestones and Phases

After validating your product idea, you and your product team are ready to plan the entire journey from concept to launch. I like to split the development process into clear phases. You might define them as:

- Alpha: Where you create a rough version with basic features.

- Beta: Where you refine those features, add some polish and open up testing to a slightly larger group.

- Release Candidate: Where you fix critical bugs, handle final refinements, and prepare for the official launch.

- Launch: The version you share widely.

- Post-Launch Updates: Ongoing refinements, expansions, or additional features.

Each phase has goals, like finishing a certain feature set or passing a performance test. Breaking it down this way stops you from trying everything simultaneously. It also helps your team see where they fit in. Developers know which tasks they need to tackle first. Designers know when to finalise the interface. Marketing knows when to start outreach.

Timelines and Managing Risks

One of the hardest parts of any plan is the timeline. Developers might say a feature will take three days, but hidden complications can push that to three weeks. Designers might underestimate how long user feedback cycles take. To offset this, I build buffers into each phase. If you think something will take six weeks, give it seven or eight. That safety net means you’re not always in panic mode when reality conflicts with estimates.

In parallel, consider your risks. You might worry about a web application’s server load or potential security flaws. For a complex internal system, you might have dependencies on other teams or older code that’s fragile. Document these risks and note the likelihood of each. Then, outline a fallback plan if something goes wrong. It’s not about being paranoid—it’s about being prepared. If you see trouble brewing, you can pivot or reassign resources quickly.
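As a rough illustration, the risk list described above can be kept as a small, sortable register. This is a minimal sketch: the 1 to 5 scales, the likelihood-times-impact score, the field names, and the example entries are all assumptions for illustration, not a prescribed method.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    name: str
    likelihood: int  # 1 (rare) to 5 (almost certain); the scale is an assumption
    impact: int      # 1 (minor) to 5 (severe); the scale is an assumption
    fallback: str    # what the team does if the risk materialises

    @property
    def score(self) -> int:
        # Simple likelihood x impact scoring; other weightings are possible.
        return self.likelihood * self.impact


def rank_risks(risks: list[Risk]) -> list[Risk]:
    """Return risks ordered from highest to lowest score."""
    return sorted(risks, key=lambda r: r.score, reverse=True)


# Hypothetical entries for illustration only.
register = [
    Risk("Server overload at launch", 3, 5, "Enable auto-scaling and a static fallback page"),
    Risk("Fragile legacy code", 4, 3, "Wrap it behind an adapter and add regression tests"),
    Risk("Dependency on another team", 2, 4, "Agree on a stubbed API so work can continue"),
]

for risk in rank_risks(register):
    print(f"{risk.score:>2}  {risk.name} -> {risk.fallback}")
```

Reviewing the top of this list at each phase boundary is one way to keep the fallback plans current.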

Setting Clear Roles

A roadmap is more than tasks and deadlines. It clarifies who owns each piece of the puzzle. In many cases, you’ll have a product owner who handles feature priorities, a lead developer who manages technical decisions, a UX designer who refines user flows, and a QA engineer who tests everything. One person might wear multiple hats if you’re in a smaller team. That’s fine, as long as responsibilities are spelt out. Without role clarity, tasks can slip through the cracks.

• • •

The Minimum Viable Product (MVP)

Why Start Small?

An MVP is the lean version of your product that focuses on your core value proposition. It lets you test the waters without over-investing in features that might be irrelevant. I’m a big believer in this approach. It’s all about validating assumptions in the real world. I’ve seen teams that skip the MVP because they want to release something “perfect” right away. Usually, that leads to months (or years) of development, only to launch and find out people don’t need half the features.

Prioritising Essential Features

Deciding what goes into the MVP can be tricky. You might want many bells and whistles, but you must be ruthless. This is often called business analysis. Ask yourself, “If we remove this feature, does the product still address the main user problem?” If yes, the feature can probably wait. If you’re building a task management app, perhaps the MVP is just creating tasks, assigning them to users, and marking them complete. Leave advanced reporting or complex workflows for later. Your priority is to confirm that your idea stands on its own feet.
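The “does the product still solve the main problem without it?” question can be applied mechanically to a backlog. The sketch below uses the task management example; the `Feature` type, the `solves_core_problem` flag, and the function name are hypothetical, and the judgement behind each flag still has to come from the team.

```python
from dataclasses import dataclass


@dataclass
class Feature:
    name: str
    solves_core_problem: bool  # would the product fail its main job without it?


def split_backlog(features: list[Feature]) -> tuple[list[str], list[str]]:
    """Split features into (MVP scope, later phases)."""
    mvp = [f.name for f in features if f.solves_core_problem]
    later = [f.name for f in features if not f.solves_core_problem]
    return mvp, later


candidates = [
    Feature("Create tasks", True),
    Feature("Assign tasks to users", True),
    Feature("Mark tasks complete", True),
    Feature("Advanced reporting", False),
    Feature("Complex workflows", False),
]

mvp, later = split_backlog(candidates)
print("MVP:", mvp)
print("Later:", later)
```

The “later” list is not discarded; it becomes the starting backlog for the next phase.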

Iteration and Incremental Releases

Once you launch the MVP, user feedback becomes priceless. Embrace it. Make sure you have channels—like an in-app survey, a feedback form, or an email for suggestions—so people can tell you what they like or dislike. Incorporate that feedback into quick updates. Some teams push new builds weekly or even daily. That iterative rhythm helps you adapt your product to real-world usage. You can add new features once the basic ones are stable. Or you might pivot if users reveal a better direction.

• • •

Process Frameworks: Agile, Scrum, and Kanban

Why Iterative Development Fits Software

Software is never truly “done.” Even if you reach version 1.0, there’s usually a version 1.1 or 2.0 on the horizon. That’s why iterative methods like Agile are so popular in product development. They let you release features in small cycles (often called sprints), gather feedback, and tweak direction if needed.

I used to run projects in a more traditional Waterfall manner: you plan everything upfront, then design, develop, then test, then launch. While this can work for smaller projects, it can be risky for bigger ones. If market conditions shift or user expectations change halfway, you’re locked into a rigid plan. Agile methods, on the other hand, encourage short cycles—maybe two weeks each—where you code a handful of features, test them and evaluate the next steps.

Scrum Basics

Scrum is a popular Agile framework. You maintain a backlog of tasks (user stories, bugs, improvements), prioritise them in a sprint backlog, and tackle them in a set time frame (e.g., two weeks). Each day, your team holds a quick stand-up meeting to sync. At the end, you review progress in a sprint review and gather lessons in a retrospective.

Key roles in Scrum often include:

- Product Owner: Oversees the backlog and feature priorities.

- Scrum Master: Manages the process, clears roadblocks, and ensures good team dynamics.

- Development Team: Builds and tests the product increment.

I’ve succeeded with Scrum in projects where frequent feedback cycles are needed. It keeps the team aligned, and the retrospectives help refine our approach to product development. The biggest challenge is writing user stories that capture real user value rather than tasks that are too technical or vague.

Kanban as an Alternative

Kanban uses a board with columns like “To Do,” “In Progress,” “QA,” and “Done.” You move tasks through these columns as work unfolds. Instead of time-bound sprints, you work on tasks continuously and limit the number of tasks in progress at once. This approach can be more straightforward if your team deals with frequent incoming requests and needs flexibility.

I once led a support-heavy development team that found Kanban a better fit. We never knew how many bug reports or small features would arrive weekly. Instead of planning sprints, we managed a rolling backlog and kept the “In Progress” column limited to what our team could handle at once.
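To make the work-in-progress limit concrete, here is a minimal in-memory sketch of a board that refuses to pull a new task into “In Progress” once the limit is reached. The class, method names, and example tasks are hypothetical; real Kanban tools offer the same idea through their own settings.

```python
class KanbanBoard:
    """Tiny in-memory board with a work-in-progress (WIP) limit."""

    COLUMNS = ("To Do", "In Progress", "QA", "Done")

    def __init__(self, wip_limit: int) -> None:
        self.wip_limit = wip_limit
        self.columns: dict[str, list[str]] = {name: [] for name in self.COLUMNS}

    def add(self, task: str) -> None:
        self.columns["To Do"].append(task)

    def move(self, task: str, target: str) -> None:
        if target not in self.columns:
            raise ValueError(f"Unknown column: {target}")
        source = next((c for c, tasks in self.columns.items() if task in tasks), None)
        if source is None:
            raise ValueError(f"Unknown task: {task}")
        entering_wip = target == "In Progress" and source != target
        if entering_wip and len(self.columns[target]) >= self.wip_limit:
            raise RuntimeError("WIP limit reached; finish something first")
        self.columns[source].remove(task)
        self.columns[target].append(task)


board = KanbanBoard(wip_limit=2)
for task in ("Fix login bug", "Add CSV export", "Update footer"):
    board.add(task)

board.move("Fix login bug", "In Progress")
board.move("Add CSV export", "In Progress")
try:
    board.move("Update footer", "In Progress")  # third item exceeds the limit
except RuntimeError as exc:
    print(exc)

board.move("Fix login bug", "QA")               # frees a slot
board.move("Update footer", "In Progress")      # now allowed
print(board.columns)
```

The limit is what turns the board from a status display into a pacing tool: new work starts only when something else moves on.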

• • •

Team Structure and Collaboration

The Value of Cross-Functional Teams

Web or software development involves various skills: coding, database management, user experience design, project oversight, testing, and sometimes marketing or content creation. A cross-functional team brings these talents together from day one. Instead of developers working in isolation and later handing the product over to QA, they collaborate in real time. That synergy often unearths issues before they get too big.

I’ve observed a significant shift in team morale when everyone feels ownership. If a designer points out a performance bottleneck or a developer spots a user-interface flaw, that’s a win. You tap into the group’s collective knowledge, and each person learns from the others. This is especially valuable in smaller startups, where people wear multiple hats.

Making Roles Clear

Even in a cross-functional setup, clarity about who decides what is essential. For instance, a lead developer might handle architectural decisions, but the product owner sets feature priorities. A QA engineer might oversee test cases, but a feature developer fixes the bugs. If roles are murky, tasks may be duplicated, or decisions might get stalled. Establishing a RACI (Responsible, Accountable, Consulted, Informed) matrix can help. It’s a simple chart indicating each participant’s level of involvement in different tasks.
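A RACI matrix is simple enough to keep as plain data and check automatically. The sketch below assumes the common convention that each task has exactly one Accountable role; the tasks and role assignments are hypothetical examples based on the roles mentioned above.

```python
# Hypothetical tasks and role names.
# R = Responsible, A = Accountable, C = Consulted, I = Informed.
raci = {
    "Architecture decisions": {"Lead Developer": "A", "Product Owner": "C", "QA Engineer": "I"},
    "Feature priorities": {"Product Owner": "A", "Lead Developer": "C", "QA Engineer": "I"},
    "Test cases": {"QA Engineer": "A", "Lead Developer": "C", "Product Owner": "I"},
    "Bug fixes": {"Feature Developer": "R", "Lead Developer": "A", "QA Engineer": "C"},
}


def tasks_without_single_owner(matrix: dict[str, dict[str, str]]) -> list[str]:
    """Return tasks that do not have exactly one Accountable ("A") role."""
    return [
        task
        for task, roles in matrix.items()
        if list(roles.values()).count("A") != 1
    ]


print(tasks_without_single_owner(raci))  # prints [] when every task has one owner
```

Any task the check returns is a candidate for the duplicated work or stalled decisions described above.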

Communication in Remote vs. On-Site Teams

Remote work has boomed in the tech world. It has advantages like tapping a global talent pool, but it also demands disciplined communication. Regular video calls, quick chat tools, and thorough documentation become vital. Time zone differences can also complicate daily stand-ups or quick feedback loops. I once coordinated a team across three continents, with a 13-hour gap between some members. We relied on asynchronous updates and careful scheduling to keep momentum.

On-site teams, on the other hand, can do spontaneous discussions. That’s great for speed but can lead to undocumented decisions if you’re not careful. I’ve learned to encourage teams, remote or on-site, to keep a short record of significant decisions. A quick Slack update or a shared document can prevent confusion later.

• • •

Testing, Quality Control, and Continuous Integration

Multiple Layers of Testing

Thorough testing is the backbone of a stable release. I break it down into several layers:

- Unit Tests: Validate small code components or functions. If you have a function that calculates shipping costs, a unit test checks different scenarios.

- Integration Tests: Confirm that separate modules (e.g., frontend and backend APIs) work together.

- End-to-End Tests: Simulate real user journeys, from logging in to completing an action.

- Performance Tests: Check how the system handles loads.

- Security Tests: Seek vulnerabilities or possible attacks.

Skipping any layer can leave you blind to lurking problems.
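As an example of the first layer, here is a minimal unit test sketch for the shipping cost scenario using Python’s built-in `unittest` module. The pricing rules (a flat 5.00 up to 1 kg, 2.00 per extra kg, a 10.00 express surcharge) are invented purely for illustration.

```python
import unittest


def shipping_cost(weight_kg: float, express: bool = False) -> float:
    """Hypothetical pricing: flat 5.00 up to 1 kg, 2.00 per extra kg, express adds 10.00."""
    if weight_kg <= 0:
        raise ValueError("weight must be positive")
    cost = 5.0 + max(0.0, weight_kg - 1.0) * 2.0
    return (cost + 10.0) if express else cost


class ShippingCostTests(unittest.TestCase):
    def test_light_parcel_pays_flat_rate(self):
        self.assertEqual(shipping_cost(0.5), 5.0)

    def test_heavier_parcel_pays_per_extra_kg(self):
        self.assertEqual(shipping_cost(3.0), 9.0)

    def test_express_adds_surcharge(self):
        self.assertEqual(shipping_cost(3.0, express=True), 19.0)

    def test_non_positive_weight_is_rejected(self):
        with self.assertRaises(ValueError):
            shipping_cost(0)


if __name__ == "__main__":
    # Run the suite explicitly so the script does not exit the interpreter.
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(ShippingCostTests)
    unittest.TextTestRunner().run(suite)
```

Each test covers one scenario, so a failure points straight at the rule that broke.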

Automation vs. Manual Testing

Automation is perfect for repetitive tasks. Automated scripts can run unit and integration tests to detect regressions every time you push code. That practice, known as continuous integration (CI), alerts you to issues within minutes. If a developer merges a change that breaks a function, the test pipeline will fail, and the team can fix it before it spreads.

Manual testing, meanwhile, excels at capturing nuances a machine can’t. A tester might notice a button that’s visually misaligned or a confusing step in the user workflow. They can also do exploratory testing, trying odd combinations of inputs or processes that automated scripts might not cover. I believe in mixing both: relying on automation for quick feedback loops and then using manual exploration to check for subtle usability or logic errors.

Issue Tracking and Continuous Deployment

All identified bugs or improvement requests should go in a shared system like Jira, GitHub Issues, or any similar platform. That centralises the backlog and helps you prioritise. Some teams practise continuous deployment, where every passing build is automatically pushed to production if it meets certain conditions. It’s a bold approach that demands robust testing and rollback procedures. It works best in systems where frequent updates are the norm, like a consumer-facing SaaS. In other scenarios, you might prefer a scheduled release cycle for stability.

• • •

Security and Performance Factors

Secure by Default

Security often gets overlooked until a breach or exploit appears. I’ve been in projects where encryption, secure authentication, and proper data storage were afterthoughts. That’s risky. If your product handles user credentials or sensitive data, you should prioritise safe coding practices. That can mean hashing passwords, validating inputs to avoid SQL injection, and reviewing libraries for known vulnerabilities.
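Two of these practices, password hashing and input handling that avoids SQL injection, can be sketched with Python’s standard library alone. This is a minimal illustration, not a complete authentication system: the table layout, function names, and iteration count are assumptions, and many teams will prefer a dedicated, well-reviewed password hashing library.

```python
import hashlib
import hmac
import os
import sqlite3

ITERATIONS = 200_000  # illustrative value; tune to your hardware and current guidance


def hash_password(password: str) -> tuple[bytes, bytes]:
    """Return (salt, digest) using PBKDF2-HMAC-SHA256 with a random salt."""
    salt = os.urandom(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, digest


def verify_password(password: str, salt: bytes, expected: bytes) -> bool:
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return hmac.compare_digest(digest, expected)  # constant-time comparison


def find_user(conn: sqlite3.Connection, name: str):
    # The ? placeholder keeps user input out of the SQL text itself.
    query = "SELECT salt, digest FROM users WHERE name = ?"
    return conn.execute(query, (name,)).fetchone()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT PRIMARY KEY, salt BLOB, digest BLOB)")
salt, digest = hash_password("correct horse")
conn.execute("INSERT INTO users VALUES (?, ?, ?)", ("alice", salt, digest))

row = find_user(conn, "alice")
print(verify_password("correct horse", *row))   # True
print(find_user(conn, "alice' OR '1'='1"))      # None: treated as a literal name
```

The injection attempt fails because the database receives the input as a value to compare, never as part of the query.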

Performance Matters

Users have little patience for slow load times or clunky performance. Keep an eye on system metrics. If you host on the cloud, consider scaling solutions early: auto-scaling groups, load balancers, or distributed databases. Use analytics to track page speeds or response times. Users may leave for good if they see a blank screen for too long. I’ve noticed that shaving even a second off page load time can boost retention.

Regulatory Considerations

Depending on your domain, laws and rules might apply (GDPR for data privacy, HIPAA for health info, etc.). Failing to meet these standards can lead to fines or lawsuits. Whenever I start a project in a regulated field, I bring legal or compliance experts into the conversation early. If you wait until launch, you might have to rebuild entire parts of your product.

• • •

Launch and Deployment

Preparing for the Big Day

As you near a release, there’s a flurry of activity. You freeze features, finish final tests, and stabilise the code. Then, you set a specific launch day or window. I recommend alerting your marketing team, support staff, and other stakeholders. They should be ready to handle inquiries, bug reports, or downtime. Confirm you can handle the traffic spike if you plan a big promotional push.

Deployment Strategies

You can approach deployment in different ways:

- All-at-Once (Big Bang): Everything goes live simultaneously. This can be dramatic but leaves no safety net.

- Staged Rollout: You release to a small subset of users, monitor metrics, and expand if all goes well.

- Beta Release: You keep the product “in beta” even if it’s mostly complete, letting early adopters try it and provide feedback.

I remember a client who chose a Big Bang release for a newly rebuilt e-commerce store before a holiday sale. Given the potential for huge traffic, a staging environment might have been wiser. They ended up dealing with performance hitches in real-time while customers tried to shop, which was stressful for everyone involved.
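For contrast with Big Bang, a staged rollout can be as simple as assigning each user to a stable bucket and widening the percentage over time. The sketch below is one common way to do that with a hash; the function name, the feature label, and the user IDs are hypothetical.

```python
import hashlib


def in_rollout(user_id: str, percent: int, feature: str = "new-release") -> bool:
    """Place a user in a stable 0-99 bucket and compare it with the rollout percentage."""
    digest = hashlib.sha256(f"{feature}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100
    return bucket < percent


users = [f"user-{n}" for n in range(1000)]
for percent in (5, 25, 100):
    enabled = sum(in_rollout(u, percent) for u in users)
    print(f"{percent}% rollout -> {enabled} of {len(users)} users")
```

Because the bucket depends only on the user and the feature label, a user who gets the release at 5% keeps it at 25%, so raising the percentage only ever adds people.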

Handling Late-Stage Discoveries

Even with rigorous testing, it’s common to find last-minute problems. Maybe a rare user device triggers a bug, or a certain data set wasn’t tested. If the issue is serious, you might need to delay or do a hotfix immediately. Communication is crucial. If you’re transparent with users about a hiccup, many will be patient, especially if you fix it promptly. If you hide problems or respond slowly, you’ll damage trust.

• • •

Post-Launch Maintenance and Growth

Early Days After Launch

The first few weeks post-launch can feel like a whirlwind. Users may discover flaws you never saw. Keep your ear to the ground. If relevant, engage on forums or social media and have a quick response loop for bug reports. If you gather usage metrics, watch them to see where drop-offs happen or which features remain underused.

I also like to hold a “lessons learned” meeting a couple of weeks after the launch. Everyone discusses what worked and what didn’t. Perhaps the team found the code freeze too short or discovered that marketing started too late. Document those points for future releases. Over time, you build an internal knowledge base that makes launching each new product smoother.

Ongoing Updates

The digital world is never static. You’ll add features, refine existing ones, and handle new requirements. Maybe you start with monthly sprints or quarterly updates. Maybe you push continuous patches. The important part is that you keep the product evolving to remain relevant. If your user base is large, you might have a separate staging environment for big changes before rolling them out.

In the past, “launch” was often considered the finish line. These days, launch is often a midpoint in a product’s lifecycle. The feedback loop remains crucial. Keep talking to users, reading analytics, and seeing how your product fits the changing market.

Scaling Over Time

If your product grows significantly, scaling might become a top priority. You could need database sharding, load balancing, or microservices to handle heavy loads. You might also expand your team, adding specialised roles like site reliability engineers or data scientists. It’s a good idea to plan for this from the beginning. Even a small note in your early strategy can help you avoid painting yourself into a corner with architecture that can’t scale well.

• • •

Measuring Success

Key Metrics to Track

You can’t improve what you don’t measure. Depending on your product, the critical metrics differ. Here are a few common ones for web or software projects:

- Active Users (Daily or Monthly): How many people use the product regularly?

- Session Length or Time on App: Do users engage for a decent duration or bounce quickly?

- Retention Rates: Do people come back after trying it once?

- Conversion Rates: How many trial users become paying customers if it’s a paid product?

- Churn Rates: How many users stop using or cancel the service over time?

- Error Rates and Crash Logs: Are there frequent crashes or script errors?

Choose metrics that relate to your product’s real goals. For example, if the main goal is revenue, track conversions and average spending. If it’s engagement, focus on active usage and session times. If it’s lead generation, watch sign-up funnels.
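Several of these metrics are simple ratios, which makes them easy to compute consistently. The sketch below shows conversion, churn, and retention as percentages; all the figures and variable names are hypothetical, and the exact definitions (for example, the retention window) vary between teams.

```python
def rate(part: int, whole: int) -> float:
    """Return part/whole as a percentage, or 0.0 when there is no data."""
    return round(100 * part / whole, 1) if whole else 0.0


# Hypothetical monthly figures for illustration only.
trial_users = 400
converted_to_paid = 48
customers_at_start = 1_000
customers_cancelled = 35
new_users_last_month = 500
of_those_active_this_month = 210

conversion_rate = rate(converted_to_paid, trial_users)
churn_rate = rate(customers_cancelled, customers_at_start)
retention_rate = rate(of_those_active_this_month, new_users_last_month)

print(f"Conversion: {conversion_rate}%")  # 12.0%
print(f"Churn:      {churn_rate}%")       # 3.5%
print(f"Retention:  {retention_rate}%")   # 42.0%
```

Whichever definitions you choose, write them down once so the numbers stay comparable from month to month.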

Setting Targets

Once you pick metrics, define what success looks like. For instance, if you aim for 10,000 monthly active users by the end of the quarter, that’s a concrete target. You might also set sub-goals like “reduce bounce rate from 40% to 20% within three months.” Having targets creates a yardstick to measure performance and helps the team celebrate milestones.

Iteration and Analytics

For websites, use analytics tools such as Google Analytics or more advanced platforms to watch how users behave. For mobile apps, you might integrate Crashlytics or other in-app analytics. The data reveals patterns: maybe many people drop off at a certain form step, or they rarely click a particular feature. Adjust accordingly. One of my favourite routines is a weekly metrics review, where we track user data and discuss the next small tweak or test we should run.
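Spotting the form step where people drop off is a small calculation once you have counts per step. The sketch below assumes you have already exported those counts from your analytics tool; the step names and numbers are made up for illustration.

```python
# Hypothetical counts of users reaching each step of a sign-up flow.
funnel = [
    ("Landing page", 2000),
    ("Started form", 1200),
    ("Entered payment details", 480),
    ("Completed sign-up", 420),
]


def drop_offs(steps: list[tuple[str, int]]) -> list[tuple[str, str, float]]:
    """Return (from_step, to_step, percent_lost) for each transition."""
    results = []
    for (name_a, count_a), (name_b, count_b) in zip(steps, steps[1:]):
        lost = 100 * (count_a - count_b) / count_a if count_a else 0.0
        results.append((name_a, name_b, round(lost, 1)))
    return results


for start, end, lost in drop_offs(funnel):
    print(f"{start} -> {end}: {lost}% lost")

worst = max(drop_offs(funnel), key=lambda row: row[2])
print("Biggest drop-off:", worst)
```

In this made-up data the largest loss is between starting the form and entering payment details, which is where a weekly review would focus its next test.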

Common Pitfalls

Even the best-laid plans can run into problems. I’ve seen these pitfalls more times than I can count:

Overloading Version 1.0

If you try to include every possible feature in your first release, you risk endless delays and a cluttered experience. Users often prefer a sleek, simpler product that works well rather than a sprawling one with rough edges.

Underestimating Technical Debt

Sometimes you code quick fixes to meet deadlines, planning to clean them later. But “later” never comes, and the codebase becomes harder to maintain. Setting aside some development time each sprint to address debt keeps your foundation stable.

Vague Roles and Responsibilities

When it’s unclear who signs off on a feature or who merges pull requests, tasks get stuck. Or worse, unapproved changes slip in. Clarity is crucial for both accountability and smooth progress.

Delaying Security and Performance Checks

If you bolt on security at the end, you might have to redo large portions of your application. The same goes for performance. By making these considerations part of your initial design, you avoid major rework.

Ignoring User Feedback

Building in a vacuum doesn’t help anyone. If you dismiss user comments or skip user testing, you might end up with a product that meets internal opinions but not real external needs.

• • •

Myth-Busting and Frequent Questions

“We Don’t Need a Strategy—We’ll Just Build It”

I used to hear this a lot, especially in small startups. While spontaneity can work for a quick hackathon, a real product with lasting impact needs at least some structure. A short strategy is still better than none.

“We Can Only Launch When Everything Is Perfect”

Perfectionism can drag you into an endless cycle of tweaks. I advocate for releasing a solid, functional version, then enhancing it regularly. Users appreciate quick improvements more than indefinite waiting.

“Agile Means We Don’t Plan”

Agile frameworks still involve planning; they just do it in shorter cycles and respond to fresh data. You’ll have a backlog, you’ll groom tasks, and you’ll set sprint goals. It’s structured but flexible.

FAQ: How Long Should an MVP Take?

It varies widely by product and team. Some MVPs come together in a few weeks; others might need a few months. The principle is to build the smallest version that answers your critical questions about user interest.

FAQ: Do I Need a Dedicated QA Team?

It depends on your scale. If you have multiple developers pushing code daily, a dedicated QA engineer or team speeds up detection of issues. On smaller teams, developers might handle testing, but it’s wise to assign that role clearly and treat it as a priority.

Case Studies (More In-Depth)

I’ll include three broader examples from my past, illustrating different project sizes and outcomes.

• • •

Case Study A: An Airbnb-Style Platform for Fisheries

Concept: A booking system and CRM system for fisheries.

Team Size: Six, including me as a product strategist.

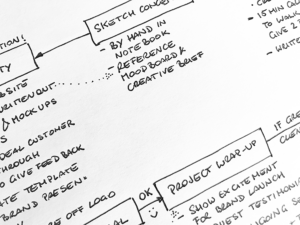

Approach: The client and I mapped out the entire market, personas, customer needs, user journeys, and low-fidelity wireframes to align the team on what the MVP would consist of.

Key Lesson: Let’s start with the negative. While the research we performed at the time was thorough, we miscalculated the booking fees, which left revenue on the table. Now for the positive: I opted to try out a new product development strategy with this client, and it really worked. The process was smooth, and the entire team understood the vision (mainly thanks to introducing the low-fidelity wireframes earlier). That kept the team ruthlessly focused on the MVP while creating a backlog for phase 2.

Outcome: Solid growth in the first 18 months, which led to revenue being used to tackle features for the anglers outside of bookings. The platform is still running today and going from strength to strength.

Case Study B: Building the Largest SharePoint Platform in the UK for the Healthcare Industry, Sponsored by Microsoft

Concept: Align three distinct and separate business divisions with the company’s mission, vision, and values.

Team Size: Around 18, including stakeholders, project managers, strategists, brand specialists, UX and UI designers, backend devs, frontend devs, QA, and a product owner.

Approach: This was my first truly Agile project, with two-week sprints, daily stand-ups, and a continuous integration pipeline. The biggest challenge was dealing with legacy data structures and systems that lacked clear documentation, with all client knowledge lost as people moved on. We prioritised rewriting the core modules first, then layered on new features.

Key Lesson: Midway, we realised the biggest issue was that SharePoint in the cloud was a limited version of what was possible on-premises. The devs took a couple of sprints to prototype a way to overcome this. The key lesson here is that the technology was chosen before the project started, and the platform was not aligned with the business requirements.

Outcome: The platform rolled out in phases, a month past the original planned launch date. However, it was such a success that it achieved all the company’s goals while also serving as a showcase for the company’s sponsor.

Case Study C: My First MVP, Which I Thought Was Not Strong Enough

Concept: A curriculum management platform for the Department of Education.

Team Size: Three on our side: our client, our founder, and me, the sole young and naive developer, working on my first business project at the age of 19.

Approach: This was in the days before the internet, before the mouse and Windows; yes, back in the DOS days. I was given a specification created by the DoE and asked to create a prototype in 8 weeks, then report to their head office in Sheffield to demo our progress. I was so eager to impress, and so worried that what I had built wasn’t enough, that I often worked through the night. I was even developing the system on a really chunky laptop in the back of my boss’s car as we drove from Surrey to Sheffield at 5 in the morning.

Key Lesson: This one is a personal lesson, and it’s about thinking you’re not good enough or could have done better. The reality was that neither of those was true.

Outcome: After I presented the software I had developed at the age of 19 to nearly 20 grey-haired gentlemen in crisp suits, one of them thanked me and said I had achieved more in 8 weeks than their previous company had in 18 months. They loved it, and their vision was becoming reality. There was no process here and no methodology followed, and the product still turned out well. So maybe it’s all in the quality of the team.

• • •

Conclusion

Developing web or software products is rarely straightforward. It involves understanding user needs, marshalling diverse skills, and adapting to new findings. A product development strategy ties those threads together. It’s a living guide that walks you through every stage: brainstorming ideas, validating them in the market, building an MVP, refining your architecture, collaborating with cross-functional teams, testing for quality, securing your data, planning a launch, and iterating post-launch based on real metrics.

From my experience, the teams that succeed aren’t always the ones with the biggest budgets or the flashiest tech. They’re the ones that stay focused, communicate effectively, and monitor user feedback. They understand that shipping a product is an ongoing conversation between creators and users—one that benefits from structure but also from the willingness to pivot or expand when facts change.

If you’re starting a new software or web initiative, I encourage you to map out a roadmap and define what matters most. Begin small, get something tangible into users’ hands, and refine as you go. Stay open to criticism and data that might contradict your original plans. Over time, that combination of clarity and flexibility will elevate your product.

Thank you for reading about what makes a complete product development strategy. I hope these insights, drawn from my personal experiences, help you tackle your next project confidently. If you have questions or want to compare notes, feel free to reach out. Building a strong product is both an art and a discipline, and each project we undertake teaches us a bit more about how to strike that balance.